Task Matrix

My dissertation investigated ways to program behaviors on humanoid robots. Programming humanoid robots to execute arbitrary tasks is difficult for numerous reasons, including high kinematic redundancy, complex dynamics, and the problems involved in balancing and locomotion. Additionally, once behaviors have been developed, "porting" a program to a robot with even slightly different kinematics or dynamics is generally quite difficult. Consequently, behaviors are typically written anew, ignoring one of the key tenets of software development: component reuse.

The Task Matrix framework (named for the sense of "matrix" as a surrounding medium or structure) promotes portability of humanoid behaviors by providing a consistent interface for accessing robot hardware. In collaboration with Honda Research Institute, USA, I have developed robot-independent behaviors such as pointing, reaching, and fixating.
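As a rough illustration of what a consistent hardware interface buys, the sketch below writes one behavior against an abstract interface and runs it unchanged on two robots with different joint sets. All names here (`RobotInterface`, `SimulatedRobot`, `rest_posture`, the joint names) are hypothetical and are not taken from the Task Matrix itself.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class RobotInterface(ABC):
    """Hypothetical common interface; not the Task Matrix's actual API."""

    @abstractmethod
    def joint_names(self) -> List[str]:
        """Return the names of the joints this robot exposes."""

    @abstractmethod
    def command_joints(self, targets: Dict[str, float]) -> None:
        """Command the named joints to the given positions (radians)."""


class SimulatedRobot(RobotInterface):
    """Kinematics-only stand-in that simply stores commanded positions."""

    def __init__(self, names: List[str]) -> None:
        self._positions = {name: 0.0 for name in names}

    def joint_names(self) -> List[str]:
        return list(self._positions)

    def command_joints(self, targets: Dict[str, float]) -> None:
        unknown = set(targets) - set(self._positions)
        if unknown:
            raise KeyError(f"unknown joints: {sorted(unknown)}")
        self._positions.update(targets)

    def positions(self) -> Dict[str, float]:
        return dict(self._positions)


def rest_posture(robot: RobotInterface) -> None:
    """A behavior written only against the interface: zero every joint."""
    robot.command_joints({name: 0.0 for name in robot.joint_names()})
```

Because `rest_posture` never refers to a specific joint, the same function applies to any robot that implements the interface, which is the kind of reuse the framework is aiming for.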

My dissertation examined how the Task Matrix can be used to perform occupational tasks (i.e., tasks performed in the workplace). I implemented a large subset of the task primitives of the MTM-1 work measurement system, a proven system for decomposing occupational tasks into primitives. A full implementation of MTM-1 would thus provide some measure of completeness over the space of occupational tasks. The combination of the robot independence provided by the Task Matrix framework and the approximate completeness offered by the MTM-1-inspired behaviors results in a constantly improving set of behaviors for performing useful tasks.

Videos from research into the Task Matrix

Waving

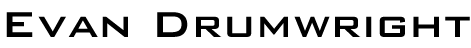

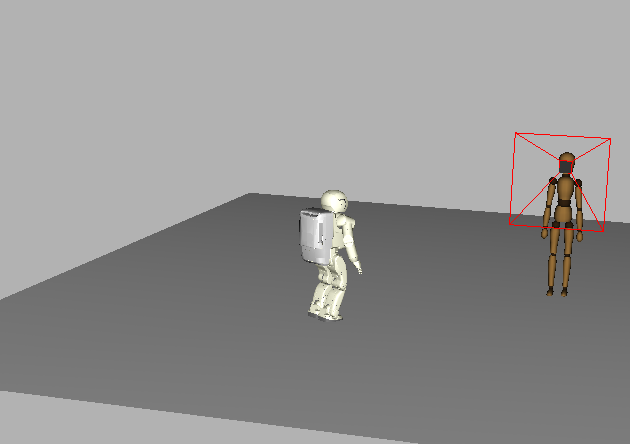

The following two movies show Asimo and the mannequin waving using a "canned" (i.e., free-space movement) task program.

NEW! Physically embodied Asimo waving:

Fixating

The videos below show both robots focusing their gaze on moving objects; the fixate program works on stationary targets as well. Note that fixate attempts to use the degrees of freedom (DOF) of both the base orientation and the neck; if that is not possible (e.g., the base DOF are currently being used for reaching), only the neck DOF are used.
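The fallback described above can be sketched as a small selection function. This is a minimal illustration only: the DOF names and the `select_fixate_dof` helper are assumptions, and the actual fixate program may arbitrate resources differently.

```python
from typing import Iterable, List

# Hypothetical DOF names; a real robot would define its own.
BASE_DOF = ["base_yaw"]
NECK_DOF = ["neck_pan", "neck_tilt"]


def select_fixate_dof(dof_in_use: Iterable[str]) -> List[str]:
    """Pick the DOF that fixate may drive, given DOF claimed by other programs.

    Use base orientation plus neck when the base is free; otherwise fall
    back to the neck alone.
    """
    busy = set(dof_in_use)
    if busy.isdisjoint(BASE_DOF):
        return BASE_DOF + NECK_DOF
    return list(NECK_DOF)
```

For example, if a reach program has already claimed `base_yaw`, the function returns only the two neck DOF.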

Asimo focusing its gaze on a rolling tennis ball.

The mannequin focusing its gaze on a rolling tennis ball.

Asimo fixating on another, walking Asimo.

The mannequin fixating on a walking Asimo.

NEW! Physically embodied Asimo fixating on a wine flute (augmented with a marker to aid in object recognition). The goal of the task is to keep the flute centered in Asimo's camera display. The movie on the right shows Asimo's viewpoint:

Pointing

Pointing is a sophisticated behavior that conveys intention, trains the sensors on a target, and is useful for communication. The video on the left shows the simulated robot pointing (one arm points while the other trains an arm-mounted camera on the target), and the video on the right shows the physically embodied robot pointing; the target object is a wine flute (augmented with a marker to facilitate recognition).

Reaching

Reaching prepares the robot to grasp an object. Multiple valid pregrasping configurations for the hand may be specified to handle the case in which one or more configurations are unreachable.

"Interactive" reaches are shown below. Each time the robot reaches to the tennis ball, the ball is moved to a new location. This video shows that motion planning is used (in real time) to navigate around obstacles.
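The idea of specifying several pregrasping configurations and falling back when some are unreachable can be sketched as follows. This is an illustration under stated assumptions: the pose representation, the `first_reachable` helper, and the crude distance-based reachability test are all hypothetical stand-ins for real inverse kinematics and motion planning.

```python
import math
from typing import Callable, Optional, Sequence, Tuple

Pose = Tuple[float, float, float]  # hand position only, in meters (assumed)


def first_reachable(
    candidates: Sequence[Pose],
    is_reachable: Callable[[Pose], bool],
) -> Optional[Pose]:
    """Return the first candidate pregrasp pose that passes the test, or None."""
    for pose in candidates:
        if is_reachable(pose):
            return pose
    return None


def within_arm_length(
    pose: Pose,
    shoulder: Pose = (0.0, 0.0, 1.0),
    arm_length: float = 0.6,
) -> bool:
    """Crude stand-in for a real reachability check: distance from the shoulder."""
    return math.dist(pose, shoulder) <= arm_length
```

A caller would list the candidate poses in order of preference and pass a reachability predicate; a result of `None` signals that no specified configuration can be used.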

NEW! Physically simulated Asimo reaching to a point 15 cm away from a wine flute. We are currently working to get this behavior running on the robot.

Grasping

Videos showing Asimo grasping a couple of objects. No videos of the mannequin grasping are shown.

Releasing

A video showing Asimo releasing a grasped object. No videos of the mannequin releasing are shown.

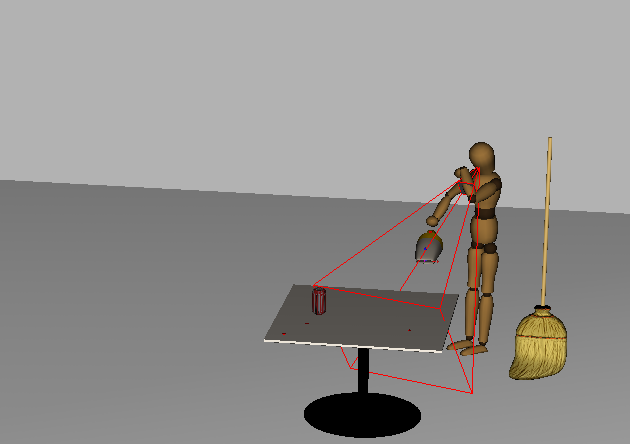

Exploring the environment

The Task Matrix utilizes a passive sensing model to allow task programs to query the environment. The model of the environment is typically kept up to date by fixating on manipulated objects, but it must first be constructed in order to map object locations for manipulation and collision avoidance. The explore task program performs this initial construction.

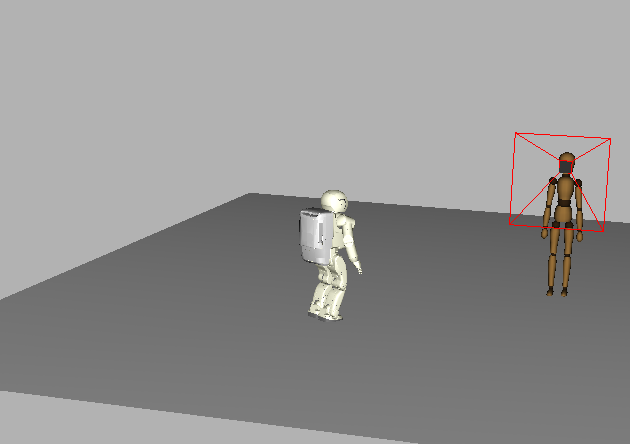

The time-lapse videos below show the environment being modeled using the robots' simulated sensors (the red tetrahedron-like wireframes emanating from the robots' heads). When a sensor models part of a surface, a brown texture appears on that surface. Note that because only the kinematics of the robots in the videos are simulated, locomotion is depicted unrealistically (sliding across the floor rather than walking). This behavior will not require any porting, however, to effect locomotion on a dynamically simulated or embodied humanoid.

Picking up an object

We constructed a complex behavior for picking up an object using three primitive task programs: reach, grasp, and fixate.

The robot fixates on the target object while simultaneously planning how to reach to it. After planning is complete, the robot reaches to the object while continuing to fixate on it. When the object is graspable, the robot grasps it, which also terminates the fixate program.
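The sequencing just described can be sketched as a small state machine. The state names, the boolean inputs, and the helper functions below are assumptions made for illustration; they are not the framework's actual representation of the pickup behavior.

```python
from enum import Enum, auto


class PickupState(Enum):
    PLAN = auto()   # fixating on the object while planning the reach
    REACH = auto()  # reaching while still fixating
    GRASP = auto()  # grasping; fixate has been terminated
    DONE = auto()


def next_state(
    state: PickupState,
    plan_ready: bool,
    graspable: bool,
    grasped: bool,
) -> PickupState:
    """Advance the pickup behavior by at most one transition."""
    if state is PickupState.PLAN and plan_ready:
        return PickupState.REACH
    if state is PickupState.REACH and graspable:
        return PickupState.GRASP
    if state is PickupState.GRASP and grasped:
        return PickupState.DONE
    return state


def fixate_active(state: PickupState) -> bool:
    """Fixate runs during planning and reaching, and stops once grasping begins."""
    return state in (PickupState.PLAN, PickupState.REACH)
```

Keeping the transition function free of side effects makes the ordering easy to check in isolation, independent of any particular robot.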

The state machine for performing the pickup behavior.

Asimo picking up a tennis ball.

Asimo picking up a vacuum.

The mannequin picking up a vacuum.

Putting down an object

The putdown task program is analogous to pickup; it uses position, release, and fixate to place an object onto a surface. However, there are two significant differences. First, putdown uses two conditions, near and above, to determine valid operational-space configurations in which to place the target object. Second, the fixate subprogram focuses on the surface rather than the grasped object.
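The text does not define the near and above conditions precisely, so the following is only one plausible reading, sketched under loud assumptions: the surface is an axis-aligned rectangle at a fixed height, "above" means the object lies over that rectangle at or above its height, and "near" means the vertical gap is within a tolerance. The `Surface` type, the tolerance value, and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters (assumed)


@dataclass(frozen=True)
class Surface:
    """Axis-aligned rectangular surface at a fixed height (an assumption)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    height: float


def above(p: Point, s: Surface) -> bool:
    """True if p is over the surface's footprint and not below its height."""
    return (s.x_min <= p[0] <= s.x_max
            and s.y_min <= p[1] <= s.y_max
            and p[2] >= s.height)


def near(p: Point, s: Surface, tol: float = 0.05) -> bool:
    """True if p is within tol meters of the surface height."""
    return abs(p[2] - s.height) <= tol


def valid_placement(p: Point, s: Surface) -> bool:
    """A placement is accepted only when both conditions hold."""
    return above(p, s) and near(p, s)
```

Expressing each condition as a separate predicate lets other programs reuse them individually, for example using only the above condition when positioning a tool over a region.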

The state machine for performing the putdown behavior.

Asimo placing a tennis ball on a table.

Asimo placing a vacuum on a table.

The mannequin placing a vacuum on a table.

Vacuuming a region of the environment

The vacuum program is a complex task program consisting not only of the primitive task programs position and fixate, but also of the complex task programs pickup and putdown. It commands the robot to pick up a vacuum, vacuum a region of the environment, and put the vacuum down when complete. The position subprogram uses the above condition to guide the tip of the vacuum above the debris to be vacuumed.

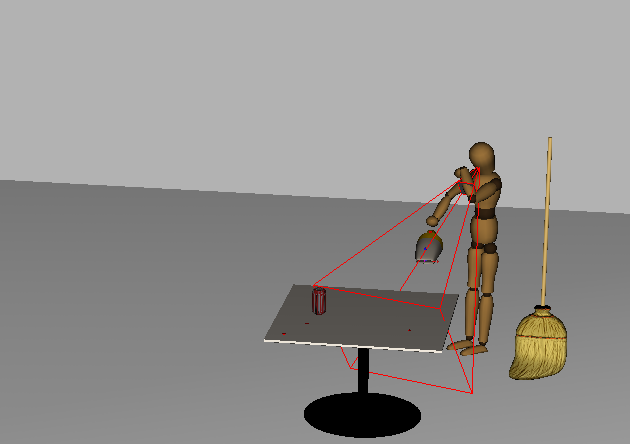

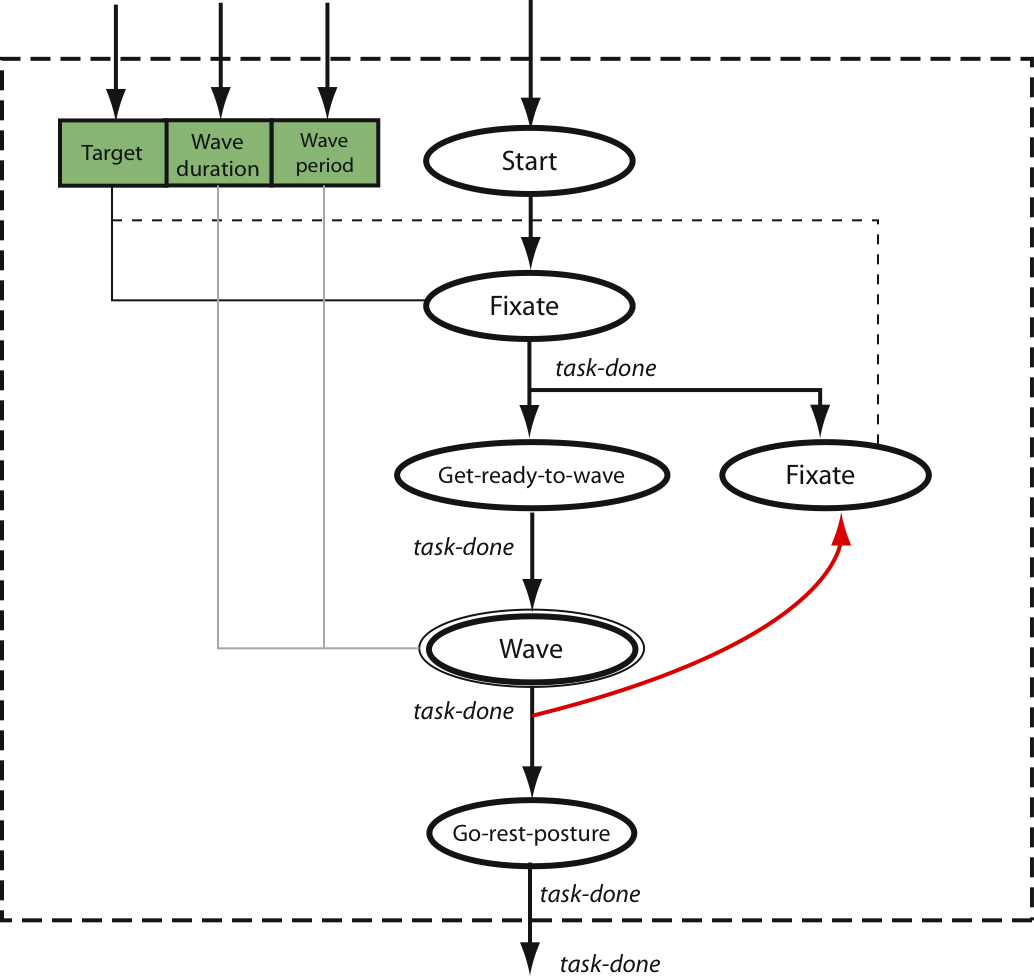

Greeting a humanoid

The greet behavior is a complex task program composed of the primitive subprogram fixate and programs for preparing to wave (i.e., a postural task program) and waving (i.e., a canned task program). Greet first focuses the robot's gaze on the target humanoid. When the gaze has focused completely, the robot brings its arm up and begins waving. When waving is complete, the robot reverts to a rest posture and stops tracking the target humanoid. See [1] for information on video artifacts.

The state machine for performing the greeting behavior.

Asimo greeting a fellow, walking Asimo.

The mannequin greeting a walking Asimo.

Relevant publications:

- Victor Ng-Thow-Hing, Evan Drumwright, Kris Hauser, Qinjuan Wu, and Joel Wormer. "Expanding Task Functionality in Established Humanoid Robots". Proc. of the IEEE-RAS Intl. Conf. on Humanoid Robotics, 2007.

- Evan Drumwright. "The Task Matrix: A Robot-Independent Framework for Programming Humanoids". Ph.D. thesis, The University of Southern California, 2007.

- Evan Drumwright, Victor Ng-Thow-Hing, and Maja Matarić. "The Task Matrix Framework for Platform-Independent Humanoid Programming". Proc. of the IEEE-RAS Intl. Conf. on Humanoid Robotics. Genova, Italy. December, 2006.

- Evan Drumwright, Victor Ng-Thow-Hing, and Maja Matarić. "Toward a Vocabulary of Primitive Task Programs for Humanoid Robots". Proc. of the IEEE Intl. Conf. on Development and Learning (ICDL). Bloomington, IN. May-June, 2006.

- Evan Drumwright and Victor Ng-Thow-Hing. "The Task Matrix: An Extensible Framework for Creating Versatile Humanoid Robots". Proc. of the IEEE Intl. Conf. on Robotics and Automation (ICRA). Orlando, FL. 2006.