CSCI 1111

Introduction to Software Development, Fall 2022

GWU Computer Science


In this project, you will write a Java program that will analyze a dataset of tweets. There are two basic tasks that we'll accomplish:

Unlike previous assignments in this class, this project is a lot more open-ended with fewer instructions: implementation details are left to the students, and you may use resources you find online, as long as you cite them (but you may not have other people write the project for you, breaking the spirit of the assignment). This project is a small, but realistic research project on a real-world dataset, and is meant to be fun and give you an idea of some of the interesting applications of the ability to program and process data.

Because so much of the implementation is left up to you to figure out, we are devoting class time this semester to working on the project together, and we also look forward to your questions on Piazza!

While you're working on this project, remember to have FUN! If you're stuck on the same thing for 15 minutes without making any progress, please raise your hand in class, ask a neighbor, or message us on Piazza!

The dataset we'll be using is a Twitter dataset automatically collected by researchers at Stanford University (if you're interested in more details, they've published an academic paper on their work with this dataset).

WARNING: the Twitter dataset was automatically collected by researchers using the Twitter API; as such, it has not been screened or sanitized to remove potentially offensive and/or objectionable material. By using it in this project, we are not condoning or endorsing any of the material in the dataset.

If you feel uncomfortable using this Twitter dataset for this project, please feel free to download any of the Plain Text UTF-8 text files from any of the 60,000 free Ebooks in the public domain from Project Gutenberg to use instead for this project.

Alternatively, if you have your own dataset of English sentences (at least a few hundred, with some associated metadata for each sentence) that you wish to analyze, you are welcome to use that as the basis for this project. Please post a message on Ed if you wish to do so, so we can review your materials and make sure they will be compatible with the rest of this project.

Their dataset can be downloaded with the link here, and should be extracted to a local directory on your computer where you will work on this project. The download contains two files: a training dataset of 1,600,000 tweets, and a test set of a few hundred. We'll focus on the test dataset, since the training file is very large and difficult to open with a program like MS Excel or a text editor. You can delete the large training file, but it might be interesting to run your analysis on a larger subset of tweets later on.

Use a text editor or MS Excel to open the test dataset and take a look at the file format: we will be extracting the tweet itself, the username, and the timestamp of the tweet for our analysis. Notice that the file is in csv (comma-separated values) format.

You can download any of the Plain Text UTF-8 text files from any of the 60,000 free Ebooks from Project Gutenberg to use instead for this project. Each such text file typically contains a table of contents at the beginning, where each line in the table of contents appears as a chapter heading later in the file (if the book you happened to pick doesn't follow this format, pick a different one).

Next, in a separate file called Driver.java, declare a main method that you will complete in order to read in the .csv or .txt file you downloaded. Practice using Google (or other resources) to find a way to read in a file line-by-line using a while loop, and print the results to the screen (for now). As a warning, the code to do this will most likely require a try-catch block to handle exceptions; we haven't covered this in class yet, so please feel free to post questions to Ed if you need help. If you get a compilation error around the code you use to open a file, make sure that you have a try-catch block around those lines.
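One common way to do this is sketched below as an illustration, not as the required solution. It uses a BufferedReader inside a try-with-resources block, which is a variant of try-catch that also closes the file for you. The class name ReadFileSketch, the helper readLines, and the file name testdata.csv are hypothetical, so substitute the name of the file you actually downloaded.

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.util.ArrayList;

public class ReadFileSketch {
    // Reads every line of the file at the given path into a list (hypothetical helper).
    public static ArrayList<String> readLines(String path) {
        ArrayList<String> lines = new ArrayList<String>();
        // try-with-resources closes the reader automatically, even if an exception occurs
        try (BufferedReader reader = new BufferedReader(new FileReader(path))) {
            String line = reader.readLine();
            while (line != null) {          // readLine() returns null at the end of the file
                lines.add(line);
                line = reader.readLine();
            }
        } catch (IOException e) {
            // FileReader and readLine() can throw; report the problem instead of crashing
            System.out.println("Could not read " + path + ": " + e.getMessage());
        }
        return lines;
    }

    public static void main(String[] args) {
        // "testdata.csv" is a placeholder: use the name of the file you downloaded
        for (String line : readLines("testdata.csv")) {
            System.out.println(line);
        }
    }
}
```

In your own Driver you would probably print (and later process) each line directly inside the while loop instead of collecting the lines first. The sketch returns a list only so that the reading logic is easy to test on its own.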

Sentence class

Next, let's write a class called Sentence that has at least the following methods:

- public Sentence(String text, String author, String timestamp): a constructor that sets three attributes
- public getters and setters getText, setText, getAuthor, setAuthor, getTimestamp, and setTimestamp, with argument and/or return types matching those passed in to the constructor
- public String toString(): returns a human-legible string representation of the current object in the format
  - {author:kinga, sentence:"Hello CSCI 1111!", timestamp:"May 11 2009"} for tweets, or
  - {author:janeausten, sentence:"Mr. Bennet was among the earliest of those who waited on Mr. Bingley.", chapter:"Chapter 2"} for Ebooks

Save your class in a file called Sentence.java. You can test your Sentence file by running the Part1Tester.java file. You'll have to run your Driver manually to test it.
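For reference, here is a minimal sketch of a Sentence class that follows the Twitter format above. The Ebook version would print chapter: in place of timestamp:. The exact spacing and quoting in toString is an assumption based on the examples above, so check it against Part1Tester.java. The small main method is only there for trying the class out. It also shows an ArrayList declared with generics, so that the list can hold only Sentence objects.

```java
import java.util.ArrayList;

public class Sentence {
    private String text;
    private String author;
    private String timestamp;

    // Sets the three attributes described above
    public Sentence(String text, String author, String timestamp) {
        this.text = text;
        this.author = author;
        this.timestamp = timestamp;
    }

    public String getText() {
        return text;
    }

    public void setText(String text) {
        this.text = text;
    }

    public String getAuthor() {
        return author;
    }

    public void setAuthor(String author) {
        this.author = author;
    }

    public String getTimestamp() {
        return timestamp;
    }

    public void setTimestamp(String timestamp) {
        this.timestamp = timestamp;
    }

    // Twitter-style format; \" puts a literal double quote inside the string
    @Override
    public String toString() {
        return "{author:" + author + ", sentence:\"" + text + "\", timestamp:\"" + timestamp + "\"}";
    }

    public static void main(String[] args) {
        // Generics: this list can hold only Sentence objects
        ArrayList<Sentence> sentences = new ArrayList<Sentence>();
        sentences.add(new Sentence("Hello CSCI 1111!", "kinga", "May 11 2009"));
        // prints {author:kinga, sentence:"Hello CSCI 1111!", timestamp:"May 11 2009"}
        System.out.println(sentences.get(0));
    }
}
```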

When you have finished, upload your solutions to BB -- ONLY ONE GROUP MEMBER should upload the solution. Please make sure to do this by the deadline (12/01 at 11:59pm). If you finish this project early in this lecture, move on to Part 2 below.

GRADING RUBRIC for Part 1:

| Criterion | Points |
| --- | --- |
| Test cases passed in tester | 13 points |
| Uses good coding style and formatting (as discussed in lecture) for both files, checked with `java -jar checkstyle-9.2.1-all.jar -c ./CS1111_checks.xml Sentence.java` and `java -jar checkstyle-9.2.1-all.jar -c ./CS1111_checks.xml Driver.java` | 4 points |
| main method opens a file correctly and prints out its contents line-by-line using a loop | 4 points |

We're going to write some code to store each sentence and its metadata as an object in an ArrayList. Declare such an ArrayList, and modify your loop in main to store each Sentence object in it, using generics to indicate the correct type of object stored. For now, just add an empty Sentence to the list each time you read in a line; in a minute, we'll discuss how to use some other code you'll write to create Sentence objects from the input file lines.

Whether you're working with tweets or books, we'll need to write code that is able to extract the three pieces of data we want from each sentence/tweet: the sentence/tweet, the author (either a twitter user or the author of the book), and temporal information about the sentence (either the timestamp of the tweet, or what chapter the sentence comes from in the book). See instructions below for one of those two options:

Option 1: Twitter dataset

In your while loop that reads in a line of the csv, you'll need to write code to split the line by commas to extract the three desired pieces, store them in a Sentence, and add that sentence to your ArrayList.

This code to process a line of the csv will be used by the convertLine method you'll write in a bit (scroll below to see how it is declared); here, we explain what most of its content will look like. For example, the 5th line of our input file is

"4","7","Mon May 11 03:21:41 UTC 2009","kindle2","yamarama","@mikefish Fair enough. But i have the Kindle2 and I think it's perfect :)"

and you should extract these three pieces:

- May 11 2009
- yamarama
- @mikefish Fair enough. But i have the Kindle2 and I think it's perfect :)

Note that you have already written most of this code as Problem 8 in Homework 5. You should recycle that code, add a few lines to keep just the pieces of the input you want here, and return the result as an ArrayList. You'll also need to process the timestamp to retain just the month, day, and year. In other words, you'll need to convert Mon May 11 03:21:41 UTC 2009 to May 11 2009. Think about what you could split on to get the right pieces here.

The tweet and the username will always be the last two entries on a comma-separated line. Note that the full dataset contains a number of optional query keywords between the timestamp and the username, which we will ignore for this project. You will need to figure out how to index into the array of pieces correctly to get the three pieces you want. They will not always be at the same index in that array, because the number of optional keywords varies from line to line. Your code could dynamically calculate the last index and the penultimate index in order to reliably pull those items out.
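Here is one possible sketch. The class and method names are hypothetical, and the code pulls the three pieces out of the example line. It uses a slightly different technique than splitting on a bare comma: it strips the outer quotes and splits on the three-character separator "," (quote, comma, quote), so commas inside a tweet don't produce extra pieces. This assumes every field is wrapped in double quotes, as in the sample line above, and that no tweet itself contains that exact quote-comma-quote sequence.

```java
import java.util.ArrayList;

public class ParseTweetSketch {
    // Hypothetical helper: returns [date, username, tweet] for one csv line.
    public static ArrayList<String> extractPieces(String line) {
        // Drop the first and last double quote, then split on the "," between quoted fields
        String inner = line.substring(1, line.length() - 1);
        String[] fields = inner.split("\",\"");
        // The timestamp is the third field, e.g. "Mon May 11 03:21:41 UTC 2009"
        String[] timeParts = fields[2].split(" ");
        String date = timeParts[1] + " " + timeParts[2] + " " + timeParts[timeParts.length - 1];
        ArrayList<String> pieces = new ArrayList<String>();
        pieces.add(date);
        pieces.add(fields[fields.length - 2]);  // username: always the penultimate field
        pieces.add(fields[fields.length - 1]);  // tweet: always the last field
        return pieces;
    }

    public static void main(String[] args) {
        String line = "\"4\",\"7\",\"Mon May 11 03:21:41 UTC 2009\",\"kindle2\",\"yamarama\","
                + "\"@mikefish Fair enough. But i have the Kindle2 and I think it's perfect :)\"";
        // prints [May 11 2009, yamarama, @mikefish Fair enough. But i have the Kindle2 and I think it's perfect :)]
        System.out.println(extractPieces(line));
    }
}
```

Because the quotes are part of the separator, the double quotes around each field are removed as a side effect. You would still need to strip any double quotes that appear inside the tweet text itself.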

Option 2: EBook dataset

While the Ebook dataset doesn't have commas that you need to split on, you will need to figure out how to use the while loop that reads in one line at a time to:

1) identify the end of one sentence (with a period) and the start of a new one;
2) glue sentences together across multiple lines read in from the file; and
3) look for the chapter number appearing on a line read in, and note that all the subsequent sentences are from that chapter.

For example, the following paragraph from the Preface of The Picture of Dorian Gray:

The artist is the creator of beautiful things. To reveal art and
conceal the artist is art’s aim. The critic is he who can translate
into another manner or a new material his impression of beautiful
things.

should be processed into the three sentences:

The artist is the creator of beautiful things.

To reveal art and conceal the artist is art’s aim.

The critic is he who can translate into another manner or a new material his impression of beautiful things.

For step 3, we recommend you manually edit the file to remove everything before the table of contents, and manually copy the table of contents into an ArrayList of Strings that you can reference to see if and when a line you read in from the file matches one of those known chapters.
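The three steps above could be sketched roughly as follows. This is a simplified illustration that rests on several assumptions:

- the lines come from an in-memory list rather than a file;
- the chapter headings were copied by hand into a list (here the hypothetical "Chapter 1" and "Chapter 2");
- a sentence ends at every period.

That last rule is naive. Abbreviations such as "Mr." will be split into separate sentences, so you may need extra checks for the book you pick. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class EbookSentenceSketch {
    // Returns {chapter, sentence} pairs; chapters is a hand-copied table of contents.
    public static ArrayList<String[]> glueSentences(List<String> lines, List<String> chapters) {
        ArrayList<String[]> result = new ArrayList<String[]>();
        String currentChapter = "";
        StringBuilder pending = new StringBuilder();  // text not yet ended by a period
        for (String raw : lines) {
            String line = raw.trim();
            if (chapters.contains(line)) {
                currentChapter = line;  // every later sentence belongs to this chapter
                continue;
            }
            if (line.isEmpty()) {
                continue;
            }
            if (pending.length() > 0) {
                pending.append(" ");  // glue this line onto the unfinished sentence
            }
            pending.append(line);
            // Emit one sentence per period found, keeping any leftover text for the next line
            int end = pending.indexOf(".");
            while (end >= 0) {
                result.add(new String[] {currentChapter, pending.substring(0, end + 1).trim()});
                pending.delete(0, end + 1);
                end = pending.indexOf(".");
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> chapters = Arrays.asList("Chapter 1", "Chapter 2");  // hypothetical TOC
        List<String> lines = Arrays.asList(
                "Chapter 1",
                "The artist is the creator of beautiful things. To reveal art and",
                "conceal the artist is art's aim. The critic is he who can translate",
                "into another manner or a new material his impression of beautiful",
                "things.");
        for (String[] pair : glueSentences(lines, chapters)) {
            System.out.println(pair[0] + ": " + pair[1]);
        }
    }
}
```

In your project, each {chapter, sentence} pair would become a Sentence object, possibly using addToEnd for the gluing step.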

Whether you're reading in tweets from the csv or literary sentences, you'll notice that both formats contain double quotation marks. You should REMOVE all double quotation marks from all the fields you store, using methods available in the String library. For example, the tweet @mikefish Fair enough. But i have the Kindle2 and I think it's perfect :) should become something like "@mikefish Fair enough But i have the Kindle2 and I think its perfect". Use the same approach to remove all punctuation (periods, commas, etc.), but be aware that removing periods can be unexpectedly tricky, so ask for help! If you want to remove periods using replaceAll, you'll want to call replaceAll("\\.", "") instead of replaceAll(".", ""). The latter will delete the entire tweet: replaceAll treats its first argument as a regular expression, and in a regular expression an unescaped period matches any character.
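A minimal sketch of this cleaning step follows. The method name clean and the exact set of punctuation characters it removes are assumptions you should adjust. Note that String.replace treats its argument literally, while replaceAll treats its first argument as a regular expression, which is why the period must be escaped.

```java
public class CleanTextSketch {
    // Hypothetical helper: strips double quotes and common punctuation from a field.
    public static String clean(String text) {
        String result = text.replace("\"", "");        // replace() is literal, no regex involved
        result = result.replaceAll("\\.", "");         // escaped: a bare "." would match every character
        result = result.replaceAll("[,!?;:'()]", "");  // a character class listing other punctuation
        return result.trim();
    }

    public static void main(String[] args) {
        // prints @mikefish Fair enough But i have the Kindle2 and I think its perfect
        System.out.println(clean("@mikefish Fair enough. But i have the Kindle2 and I think it's perfect :)"));
        // prints [] because the unescaped "." deleted every character
        System.out.println("[" + "abc.def".replaceAll(".", "") + "]");
    }
}
```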

In order to process the sentences from the file, add one of the following two methods to your Sentence class:

- public static Sentence convertLine(String line), which converts a line from the input file into a Sentence object that it returns. This method should perform all the preprocessing necessary for the toString method to easily print out the three fields without any additional processing (write the body of toString now if you haven't yet). This includes cleaning the timestamp field to have just the month, day, and year.
- public void addToEnd(String piece), which is used to add to an existing Sentence object additional parts of a sentence that were on the next line when reading in the file. Note that when processing the chapters, a single line in the file will sometimes require you to add part of a sentence to the previous sentence and also declare a new sentence.

You'll need to connect one of the methods above to your main method, so that the input can be processed, line-by-line, into an ArrayList of Sentence objects (you've already set that loop up).

In a file called ExtractTest.java, write at least two test cases in a main method:

toString expects it to be

Your test cases should demonstrate this functionality by passing in a String to the convertLine method and making sure that the Sentence is correctly captured. Use print statements to print out the results of comparing one Sentence object to another that holds the desired answer.

Processing the Ebook dataset is a bit more complex than the Twitter dataset (and harder to write test cases for with what we know so far), so you may omit writing tests if you are working with the Ebook dataset. Just make sure to at least visually verify that you can correctly read in the first few sentences of the first three chapters of the book.

You can test your Sentence file by running the appropriate part of the Part2Tester.java file. You'll have to run your Driver manually to test it.

When you have finished, upload your solutions to BB -- ONLY ONE GROUP MEMBER should upload the solution. Please make sure to do this by the deadline (12/06 at 11:59pm). If you finish this project early in this lecture, move on to Part 3 below.

GRADING RUBRIC for Part 2:

| Criterion | Points |
| --- | --- |
| Sentence class convertLine/addToEnd method works correctly together with reading in a file line-by-line (correctly stores timestamp, removes all double quotes, handles tweets with commas/handles sentences across multiple lines) | 15 points for Ebook; 9 points for Twitter (3 per test case in tester) |
| ArrayList of sentences in main is correctly declared using generics, and Sentence objects are added to it correctly | 3 points |
| At least two tests correctly written, as specified above | 6 points (Twitter only) |

As we just saw, dataset identification, collection, and pre-processing can be one of the most difficult parts of a research project! Now that we have our sentences and their metadata neatly placed into an ArrayList of Sentence objects, the fun (and easier) part can start!

For this section, we want to know what the most common topics are across all of our sentences. Taking this pulse could have a number of real-world applications. For example, people have studied tweets about COVID to try to track new outbreaks in 2020, before PCR tests were widely available, by looking at which symptoms were being reported in which locations.

Here, we're going to work on splitting up sentences into words, doing a little cleaning, and then making a list of the most common individual words or phrases across our dataset. You're free to implement all of these pieces manually using what we've learned this semester and the String library, or you can look online for NLP libraries that accomplish this sort of thing already (make sure you cite your sources with comments in the code if you do this!).

First, let's modify your Sentence class to add a method that takes the text of the sentence and returns an ArrayList that contains the words in the sentence. You should reuse the code you wrote for the last problem in Homework 8 here. Make it a method of the Sentence class called splitSentence() that no longer takes arguments and instead operates on the text stored in the object.
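One possible sketch of the splitting logic is below. It is written as a static helper that takes a String, so that it runs on its own. The name splitWords is hypothetical, and inside Sentence, splitSentence() would read the text field instead of taking an argument. The sketch splits on the Java string "\\s+" (one or more whitespace characters) rather than a single space. This small change keeps double spaces from producing empty "words".

```java
import java.util.ArrayList;

public class SplitSketch {
    // Hypothetical static stand-in for splitSentence(): returns the words in text.
    public static ArrayList<String> splitWords(String text) {
        ArrayList<String> words = new ArrayList<String>();
        String trimmed = text.trim();
        if (trimmed.isEmpty()) {
            return words;  // split() would otherwise hand back one empty "word"
        }
        // "\\s+" matches a run of spaces/tabs, so "a  b" gives [a, b], not [a, , b]
        for (String word : trimmed.split("\\s+")) {
            words.add(word);
        }
        return words;
    }

    public static void main(String[] args) {
        System.out.println(splitWords("I think  its perfect"));  // prints [I, think, its, perfect]
    }
}
```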

Next, write a method in your Driver with the signature public static HashMap<String, Integer> printTopWords that takes as its argument an ArrayList of Sentences, extracts the words from each sentence, and updates a HashMap object that keeps track of how many times each word appeared in the entire dataset. Note that you must store Integers in the HashMap, not ints (Java collections can only hold objects), so you should declare it as HashMap<String, Integer>.
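The counting loop could look something like the sketch below. To keep it self-contained, the hypothetical countWords method takes each sentence's already-split words as a list of lists. Your printTopWords would instead loop over the ArrayList of Sentence objects and call splitSentence() on each one.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;

public class WordCountSketch {
    // Counts how many times each word appears across all sentences.
    // Each inner list holds the already-split words of one sentence.
    public static HashMap<String, Integer> countWords(ArrayList<ArrayList<String>> sentenceWords) {
        HashMap<String, Integer> counts = new HashMap<String, Integer>();
        for (ArrayList<String> words : sentenceWords) {
            for (String word : words) {
                if (counts.containsKey(word)) {
                    // get() returns an Integer; Java unboxes it, adds 1, and boxes the result
                    counts.put(word, counts.get(word) + 1);
                } else {
                    counts.put(word, 1);  // first time this word is seen
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        ArrayList<ArrayList<String>> data = new ArrayList<ArrayList<String>>();
        data.add(new ArrayList<String>(Arrays.asList("kindle2", "perfect")));
        data.add(new ArrayList<String>(Arrays.asList("kindle2", "battery")));
        HashMap<String, Integer> counts = countWords(data);
        System.out.println(counts.get("kindle2"));  // prints 2
    }
}
```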

Once you have written that code, call it in your main method to obtain the HashMap of word counts (think about what you need to pass into it as an argument to glue everything together, and what to do with what the method returns). Then, copy the code below into your main method to have it loop through the HashMap and print out its entries, up to the 100 most frequent:

```java
import java.util.ArrayList;    // place with imports
import java.util.Collections;  // place with imports
import java.util.Map;          // place with imports

// Find the entry with the largest count, so every count can be padded to the same width
Map.Entry<String, Integer> maxEntry = null;
for (Map.Entry<String, Integer> entry : YOUR_HASH_MAP.entrySet()) {
    if (maxEntry == null || entry.getValue().compareTo(maxEntry.getValue()) > 0) {
        maxEntry = entry;
    }
}
int maxValueLen = (maxEntry == null) ? 0 : maxEntry.getValue().toString().length();

// Left-pad each count with spaces so that sorting the strings also sorts them by count
ArrayList<String> results = new ArrayList<String>();
for (Map.Entry<String, Integer> set : YOUR_HASH_MAP.entrySet()) {
    String value = set.getValue().toString();
    while (value.length() < maxValueLen) {
        value = " " + value;
    }
    results.add(value + " of " + set.getKey());
}

// Sort ascending, then reverse, so the most frequent entries print first
Collections.sort(results);
Collections.reverse(results);
for (int i = 0; i < results.size() && i < 100; i++) {
    System.out.println(results.get(i));
}
```

Once again, we'll cover what this code does in CSCI 1112! In the meantime, you'll need to modify the code above to change YOUR_HASH_MAP to whatever you called your HashMap variable.

Run your main method in the Driver to see if it works!

You probably noticed in your printout that a lot of words were not very interesting (like "as" and "the"). In NLP, we call these stopwords, and we usually remove them before analyzing the words we collect from our sentences.

People have compiled various lists of stopwords, and we're going to use one example (below). You could also get fancy and import other lists from various NLP libraries if you wanted, or just copy the array below into your splitSentence method:

```java
// from https://www.ranks.nl/stopwords
String[] stopwords = {"a", "about", "above", "after", "again", "against", "all", "am", "an", "and",
    "any", "are", "aren't", "as", "at", "be", "because", "been", "before", "being", "below",
    "between", "both", "but", "by", "can't", "cannot", "could", "couldn't", "did", "didn't", "do",
    "does", "doesn't", "doing", "don't", "down", "during", "each", "few", "for", "from", "further",
    "had", "hadn't", "has", "hasn't", "have", "haven't", "having", "he", "he'd", "he'll", "he's",
    "her", "here", "here's", "hers", "herself", "him", "himself", "his", "how", "how's", "i", "i'd",
    "i'll", "i'm", "i've", "if", "in", "into", "is", "isn't", "it", "it's", "its", "itself", "let's",
    "me", "more", "most", "mustn't", "my", "myself", "no", "nor", "not", "of", "off", "on", "once",
    "only", "or", "other", "ought", "our", "ours", "ourselves", "out", "over", "own", "same",
    "shan't", "she", "she'd", "she'll", "she's", "should", "shouldn't", "so", "some", "such", "than",
    "that", "that's", "the", "their", "theirs", "them", "themselves", "then", "there", "there's",
    "these", "they", "they'd", "they'll", "they're", "they've", "this", "those", "through", "to",
    "too", "under", "until", "up", "very", "was", "wasn't", "we", "we'd", "we'll", "we're", "we've",
    "were", "weren't", "what", "what's", "when", "when's", "where", "where's", "which", "while",
    "who", "who's", "whom", "why", "why's", "with", "won't", "would", "wouldn't", "you", "you'd",
    "you'll", "you're", "you've", "your", "yours", "yourself", "yourselves"};
```

Now, write code that checks every word you found when splitting the sentence by spaces against this list; if the word is found in this list of stopwords, don't include it in the ArrayList of words you're going to return. You should recycle the code you wrote for the final Quiz 4 version of Clean Array by modifying your answer to work on arrays of strings.

You'll notice that the stopwords are all lowercase, but your words may not be. Use the .toLowerCase() method from the String class to convert your words to all lowercase before checking if they appear in this list of stopwords. Now run your main method again to call splitSentence and check that the stopwords were removed correctly. What do the most common topics look like now?
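Here is one way to sketch the filtering step (the class and method names are hypothetical). The Clean Array approach would loop over the stopword array for every word. This sketch instead builds a HashSet from the array, which makes each lookup fast. Only a short excerpt of the list is included to keep the example brief. The sketch also keeps the lowercased form of each word, so that "Kindle2" and "kindle2" are counted together; this is a choice, not a requirement. If apostrophes have already been stripped (so that don't has become dont), entries such as "don't" will no longer match unless you clean the stopword list the same way.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;

public class StopwordSketch {
    // A short excerpt of the stopword list above; paste in the full array for real use
    private static final String[] STOPWORDS = {"a", "and", "i", "is", "it", "the", "to"};
    // A HashSet answers "is this word in the list?" without scanning the whole array
    private static final HashSet<String> STOPWORD_SET = new HashSet<String>(Arrays.asList(STOPWORDS));

    // Returns the lowercased words that are not stopwords, in their original order
    public static ArrayList<String> removeStopwords(ArrayList<String> words) {
        ArrayList<String> kept = new ArrayList<String>();
        for (String word : words) {
            String lower = word.toLowerCase();  // the stopword list is all lowercase
            if (!STOPWORD_SET.contains(lower)) {
                kept.add(lower);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        ArrayList<String> words = new ArrayList<String>(
                Arrays.asList("I", "think", "the", "Kindle2", "is", "perfect"));
        System.out.println(removeStopwords(words));  // prints [think, kindle2, perfect]
    }
}
```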

You may notice that some words/topics are repetitions of another word; perhaps they are synonyms, or they are the same word in singular and plural form (cat vs. cats, for example). In NLP, it is common to perform stemming and/or lemmatization to handle issues like the latter. If you're feeling very ambitious, feel free to look up a Java library that can do this sort of thing for you (at the sentence level). Otherwise, you can add further pre-processing to manually convert a plural into a singular using the .replaceAll() method of the String class.
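As an illustration of the replaceAll approach, here is a deliberately naive rule (the class and method names are hypothetical): drop one trailing s from words longer than three letters that don't end in ss. It handles cases like cats, but it will also mangle words such as news, which becomes new. Real stemmers and lemmatizers are much more involved for exactly this reason.

```java
public class PluralSketch {
    // Naive, hypothetical rule: strip one trailing "s" from words longer than three
    // letters that don't end in "ss" (so "class" and "bus" are left alone).
    public static String singularize(String word) {
        if (word.length() > 3 && !word.endsWith("ss")) {
            // "s$" is a regular expression: an "s" only at the very end of the word
            return word.replaceAll("s$", "");
        }
        return word;
    }

    public static void main(String[] args) {
        String[] words = {"cats", "kindles", "class", "bus", "news"};
        for (String word : words) {
            System.out.println(word + " -> " + singularize(word));
        }
    }
}
```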

Feel free to also modify the stopword list manually to get rid of any additional words you don't think are important.

While it's awesome that we're able to model the topics of the tweets (or Ebook) using the most common words in all the sentences, sometimes what we're really after isn't a single word, but a phrase made up of multiple words (such as "machine learning" or "out of bounds"). Looking at your results from the previous section, are there any common words that might have been part of phrases instead? See if you can improve your model by treating each consecutive pair of words left over after stopwords have been removed as a phrase (a bi-gram), and counting these frequencies in your HashMap as well. Note: some phrases may be nonsensical, but they are unlikely to appear often enough to make it into the top 100 we print.

Repeat this process for triplets (tri-grams) if you wish.
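Counting bi-grams can be sketched as below (the class and method names are hypothetical). Walk through the list of words left after stopword removal, and add one count for each adjacent pair, joined by a space, into the same kind of HashMap used for single words. Map.getOrDefault is used here as a shortcut for the containsKey check. Tri-grams would follow the same pattern with three consecutive words.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;

public class BigramSketch {
    // Adds one count for every consecutive pair of words (a bi-gram) to the shared map.
    // The words are assumed to have already had stopwords removed.
    public static void addBigrams(ArrayList<String> words, HashMap<String, Integer> counts) {
        for (int i = 0; i + 1 < words.size(); i++) {
            String phrase = words.get(i) + " " + words.get(i + 1);
            // getOrDefault returns 0 the first time a phrase is seen
            counts.put(phrase, counts.getOrDefault(phrase, 0) + 1);
        }
    }

    public static void main(String[] args) {
        HashMap<String, Integer> counts = new HashMap<String, Integer>();
        addBigrams(new ArrayList<String>(Arrays.asList("machine", "learning", "fun")), counts);
        addBigrams(new ArrayList<String>(Arrays.asList("love", "machine", "learning")), counts);
        System.out.println(counts.get("machine learning"));  // prints 2
    }
}
```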

You can test your Sentence file by running the appropriate part of the Part3Tester.java file. This tester cannot test the extra credit.

When you have finished, upload your solutions to BB -- ONLY ONE GROUP MEMBER should upload the solution. Please make sure to do this by the deadline (12/06 at 11:59pm). If you finish this project early in this lecture, move on to Part 4 below.

GRADING RUBRIC for Part 3:

| Criterion | Points |
| --- | --- |
| Sentences are correctly split over spaces | 2 points |
| HashMap correctly stores the number of times each word appears in the dataset | 5 points |
| The HashMap is printed out correctly, showing the top 100 words and their frequency | 5 points |
| Stopwords do not appear in the HashMap | 5 points |
| Further word cleaning was performed | up to 5 points (extra credit) |
| N-grams and/or phrases were calculated | up to 30 points (extra credit) |

People are often interested in monitoring trends in natural language datasets, and one way to do that is to measure how positive or negative a sentence might be. For example, an automated mental health chatbot could be trained to monitor a conversation, and look for indicators of increasing depression. This sort of analysis is often extended to tweets, reddit posts, and other social media.

In this section of the project, we're going to learn how to install and use a Java library that will allow us to measure the sentiment (positive or negative emotion) in a sentence.

In order to allow our Java code access to libraries that are not a standard part of Java, we need to download these libraries and make sure that our Java program knows how to find them. You can download these files by pasting the URLs below into your browser:

https://nlp.stanford.edu/software/stanford-corenlp-full-2018-10-05.zip

https://nlp.stanford.edu/software/stanford-english-corenlp-2018-10-05-models.jar

It will take several minutes to download these files, so please read ahead and study the code below while you're waiting.

Next, copy both files into the same directory as your Java files. Then extract the contents of the .zip file and copy the two .jar files you need out of it, using the terminal commands below (if the first command doesn't work, you can unzip the file the way you normally would):

```shell
unzip stanford-corenlp-full-2018-10-05.zip
cd stanford-corenlp-full-2018-10-05
cp ejml-0.23.jar ..
cp stanford-corenlp-3.9.2.jar ..
cd ..
```

Make sure the .jar files end up copied into the same directory as your Java files.

Now that we've downloaded the Stanford CoreNLP libraries (note that they are files that end in .jar, which stands for "Java archive"), we can import these libraries at the top of our Sentence.java:

```java
import java.util.Properties;

import org.ejml.simple.SimpleMatrix;

import edu.stanford.nlp.ling.CoreAnnotations;
import edu.stanford.nlp.neural.rnn.RNNCoreAnnotations;
import edu.stanford.nlp.pipeline.Annotation;
import edu.stanford.nlp.pipeline.StanfordCoreNLP;
import edu.stanford.nlp.sentiment.SentimentCoreAnnotations;
import edu.stanford.nlp.sentiment.SentimentCoreAnnotations.SentimentAnnotatedTree;
import edu.stanford.nlp.trees.Tree;
import edu.stanford.nlp.util.CoreMap;
```

In a minute we'll show you how to let the Java program that runs this file know where these libraries live on your machine. After adding the imports above at the top of your Sentence.java, also add the code below, which defines a method that is able to score a sentence for sentiment using the library we just downloaded:

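```java
public int getSentiment(String tweet) {
    // Choose which processing steps (annotators) the pipeline will run, in order
    Properties props = new Properties();
    props.setProperty("annotators", "tokenize, ssplit, pos, parse, sentiment");
    StanfordCoreNLP pipeline = new StanfordCoreNLP(props);
    // Run all of those steps on the text
    Annotation annotation = pipeline.process(tweet);
    // Take the first sentence the pipeline found (only that one is scored) and its annotated parse tree
    CoreMap sentence = annotation.get(CoreAnnotations.SentencesAnnotation.class).get(0);
    Tree tree = sentence.get(SentimentCoreAnnotations.SentimentAnnotatedTree.class);
    // Predicted class from 0 (Very Negative) to 4 (Very Positive)
    return RNNCoreAnnotations.getPredictedClass(tree);
}
```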

Let's go through what the pieces above are doing. Properties are flags that users can set to determine what processing steps to apply to the sentence. Here, you can see where those properties are set with setProperty: the string passed in lists the different types of processing the sentence will be subject to. In this case, you may recognize tokenization (tokenize, splitting the text into words) and sentence splitting (ssplit), similar to what we did manually above. The last annotator listed here is sentiment, which instructs the library to compute a sentiment score between 0 and 4 for the sentence: 0=Very Negative, 1=Negative, 2=Neutral, 3=Positive, or 4=Very Positive. Sentiment analysis, like our topic modeling, also requires that raw sentences be tokenized and pre-processed in order to make its judgments.

The library is called by creating a pipeline object, which passes the tweet through the processing steps we just outlined. After that, the next three lines of code take the processed sentence (recall that it no longer looks like a human-readable sentence) and pass it through a machine learning model that has been trained elsewhere in the library to guess the sentiment of this particular sentence. Pretty cool, and all in just a few lines of code!

Now, we're almost ready to use this code to score sentences. First, since the getSentiment method lives in the Sentence class, it doesn't need an argument: change the method to operate on the text field of the Sentence class instead.

Next, in your main method, write a loop that goes through your ArrayList of sentences and prints out each sentence along with its sentiment score. You'll notice that the println method actually calls your toString method if you pass it your Sentence object. This is a neat thing that Java does, and you'll learn more about it if you take CSCI 1112.

You'll need to compile and run this part of the project from your terminal. First, open a terminal window, and change directory into the folder where your java files are (see the notes from lab the first two weeks of class for a refresher of how to use the terminal if needed). All three java files must also be there; you should check this by doing ls or dir from that directory. Your terminal should have all the listed files there (ExtractTest.java is optional), including the three jar files:

Finally, in order to run your code, you need to make sure that the .jar files we downloaded earlier are in a place

where Java can find them. There are many ways to set this up elegantly, but for now we'll just point the javac and java

programs to the paths of three .jar files the code above needs to run. Try the command below that applies to your operating system;

if it doesn't work and you've verified that all the files are in the current directory, see the part below for instructions on

how to unpack the .jar files and get it working:

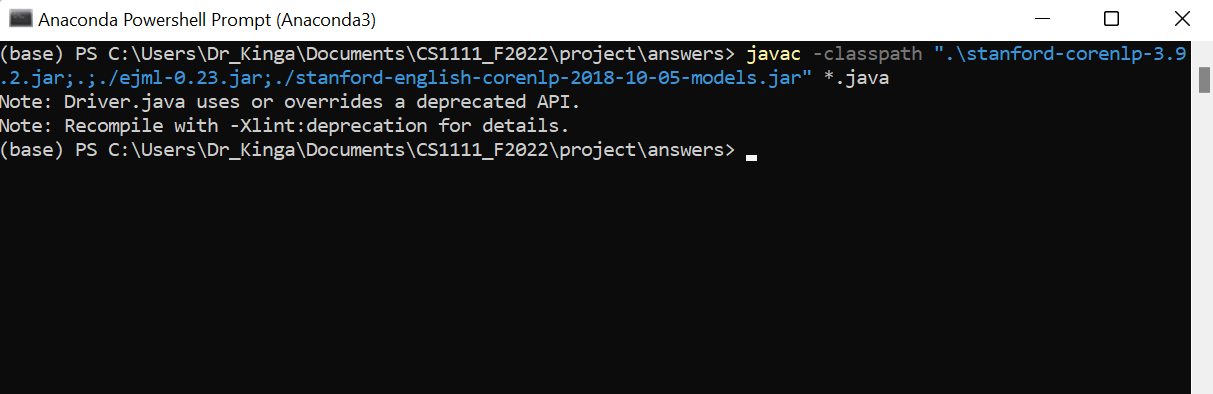

To compile on Mac/Linux:

```shell
javac -classpath ".:./ejml-0.23.jar:./stanford-corenlp-3.9.2.jar:./stanford-english-corenlp-2018-10-05-models.jar" *.java
```

or on Windows:

```shell
javac -classpath ".\stanford-corenlp-3.9.2.jar;.;./ejml-0.23.jar;./stanford-english-corenlp-2018-10-05-models.jar" *.java
```

and then, to run the Driver on Mac/Linux:

```shell
java -classpath ".:./ejml-0.23.jar:./stanford-corenlp-3.9.2.jar:./stanford-english-corenlp-2018-10-05-models.jar" Driver
```

or on Windows:

```shell
java -classpath ".\stanford-corenlp-3.9.2.jar;.;./ejml-0.23.jar;./stanford-english-corenlp-2018-10-05-models.jar" Driver
```

You'll learn more about the classpath in CSCI 1112 as well.

What do you think about the sentiments it scored for all the tweets (or literary sentences)? Do you agree or disagree?

When you have finished, upload your solutions to BB -- ONLY ONE GROUP MEMBER should upload the solution. Please make sure to do this by the deadline (12/08 at 11:59pm). If you finish this project early in this lecture, move on to Part 5 below.

GRADING RUBRIC for Part 4:

| Criterion | Points |
| --- | --- |
| Sentence.java compiles with all the changes above | 5 points |
| getSentiment has been correctly modified to operate on the text field | 2 points |
| A loop has been written that correctly iterates through all sentences, printing them out, and displaying their sentiment scores | 5 points |

We now have a way to find the major themes/topics of a group of sentences, as well as the ability to analyze the sentiment of these sentences. Next, we're going to have you write some code to filter your ArrayList of sentences into interesting subsets.

First, define and implement a method in the Sentence class called public boolean keep(String temporalRange) that returns true or false depending on whether or not the Sentence object is within either:

- a date range such as "May 31 2009-Jun 02 2009", if you are using tweets, or
- a chapter number such as "Chapter 2", depending on the Ebook you chose.

To get some practice with real-world programming and debugging, we recommend you use built-in Java libraries to convert each date (a String) into an integer (a long), so that you can check whether one date is less than and/or greater than another. These libraries often convert dates into the number of seconds (or, as with Java's Date.getTime(), milliseconds) since an arbitrary date in the past, so you can compare them with the less than and greater than operators, like normal integers. You can google (or use) any way you'd like to make this conversion, but here are some examples:

- Timestamps.
- SimpleDateFormat class to use in the solution above or ones like it; check out its API to see how you can provide an expression for the format your dates are in.
- Timestamp object for each date, you can use its methods in its API to convert the timestamp into the number of seconds.
- try-catch block to handle exceptions.

Next, modify your code in main to use the keep method to generate a new ArrayList that filters out tweets or sentences to just those in the temporal range specified.
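A possible sketch of the date comparison for the Twitter version is below. It assumes that timestamps have already been cleaned to the form May 11 2009 and that the range is written as in the example above. It uses java.text.SimpleDateFormat with the pattern "MMM dd yyyy", together with Date.getTime(), which returns milliseconds since January 1, 1970. The name inRange is hypothetical; in your Sentence class, keep would use the timestamp field instead of a parameter. Both ends of the range are treated as inclusive, which is a design choice you may want to change.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Locale;

public class DateRangeSketch {
    // Hypothetical static form of keep(): is timestamp (e.g. "Jun 01 2009") inside
    // temporalRange (e.g. "May 31 2009-Jun 02 2009"), including both ends?
    public static boolean inRange(String timestamp, String temporalRange) {
        // Locale.ENGLISH makes "MMM" understand English month abbreviations like "May" and "Jun"
        SimpleDateFormat format = new SimpleDateFormat("MMM dd yyyy", Locale.ENGLISH);
        String[] ends = temporalRange.split("-");
        try {
            long start = format.parse(ends[0].trim()).getTime();  // milliseconds since Jan 1 1970
            long end = format.parse(ends[1].trim()).getTime();
            long time = format.parse(timestamp.trim()).getTime();
            return time >= start && time <= end;
        } catch (ParseException e) {
            // parse() throws a checked exception when a string doesn't match the pattern
            System.out.println("Could not parse a date: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        String range = "May 31 2009-Jun 02 2009";
        System.out.println(inRange("Jun 01 2009", range));  // prints true
        System.out.println(inRange("May 11 2009", range));  // prints false
    }
}
```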

Finally, using all the code you've written for this project, plan and then execute a further analysis of the dataset that answers one or more of the following potential questions, and/or investigates something similar (check with the instructor if you want to deviate from this list):

- keep method that is able to filter out sentences based on keywords, rather than temporal ranges.
- keep in main, write a paragraph summarizing your results, and include metrics to support your conclusions.
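For the keyword variant of keep mentioned above, a minimal sketch might look like the following (the names and sample texts are hypothetical). It matches the keyword as a whole word, ignoring case. It also builds a new filtered list rather than removing items from the original list while looping over it.

```java
import java.util.ArrayList;
import java.util.Arrays;

public class KeywordFilterSketch {
    // Hypothetical keyword version of keep(): true if the text contains the keyword
    // as a whole word, ignoring upper/lower case.
    public static boolean keepByKeyword(String text, String keyword) {
        String target = keyword.toLowerCase();
        for (String word : text.toLowerCase().split("\\s+")) {
            if (word.equals(target)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        ArrayList<String> texts = new ArrayList<String>(Arrays.asList(
                "I love my Kindle2", "reading a paper book today", "kindle2 battery died again"));
        // Build a new, filtered list instead of removing items from the original while looping
        ArrayList<String> filtered = new ArrayList<String>();
        for (String text : texts) {
            if (keepByKeyword(text, "Kindle2")) {
                filtered.add(text);
            }
        }
        System.out.println(filtered);  // prints [I love my Kindle2, kindle2 battery died again]
    }
}
```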

When you have finished, upload your solutions to BB -- ONLY ONE GROUP MEMBER should upload the solution. Please make sure to do this by the deadline (12/12 at 11:59pm). If you finish this project early in this lecture, move on to Part 5 below.

GRADING RUBRIC for Part 5:

| Criterion | Points |
| --- | --- |
| keep has been correctly implemented to handle a temporal range and/or a keyword | 5 points |
| All code submitted is properly indented, uses good variable naming, and is appropriately commented | 5 points |
| main has been correctly modified to generate filtered ArrayLists using keep | 5 points |
| One of the two analyses above has been completed (or a third instructor-approved analysis), and a paragraph summarizing the results with metrics was provided | 10 points |
| Any additional (instructor-approved) analysis has been completed, and a paragraph summarizing the results with metrics was provided | 10 points per analysis (extra credit) |

Great work on this project! If you're interested in learning more about Natural Language Processing, including doing summer research with Dr. Kinga, feel free to drop her an email!

Otherwise, take a break before studying for finals!